We Will Not Accidentally Create AGI

July 6, 2025

We need to give ourselves more credit as complex systems.

Many lay people intuitively understand that LLMs like ChatGPT will not become AGI simply by being fed more compute. Yet people intimately involved with the technology, including prominent figures in the AI business like Elon Musk and Sam Altman, continually proclaim that we are months or a few short years away from computer superintelligence. They seem to believe that the distance between the current best LLMs and AGI is more compute, or perhaps some model tweaks, or at most one more theoretical advancement. For example, Elon Musk recently claimed in an interview with Y Combinator that "we're quite close to digital superintelligence. If it doesn't happen this year, then next year for sure". This exuberance regarding digital superintelligence is espoused by many prominent tech figures. You might say that of course AI CEOs will hype AI. Still, whatever these men's motives, they are fanning the flames of an AI fanaticism that is very real.

The only real general intelligence that we know of is our own. Naturally, when evaluating the LLMs and other artificial intelligences that we have created, we tend to compare them to ourselves. There has been much fanfare, and perhaps even more anxiety, each time machines appear to surpass us at some task, such as the LSAT. Of course, this anxiety predates even Deep Blue's victory over Garry Kasparov at chess. With the advent of LLMs, it appears that computers are now able to understand concepts and their relations to each other. An LLM is a vast network of nodes and connections: a map of reality. Like all maps, it is imperfect, though this alone is not a reason to discount it. Our own understanding of reality is infinitely limited and often incorrect.

The question remains: how does the human mind settle on valuable ideas? An LLM’s next token of output is determined by the training material it has been given. In many situations, a human has no such guidance. It’s popular to believe that most people do not do anything “new” in their jobs; they only apply what they have been taught to the task at hand. From this it would follow that the only people whose livelihoods are safe from AI are the handful of people worldwide who produce new theoretical knowledge for humanity. All the rest of us are doomed: the LLMs are nipping at our heels, and they only need more powerful hardware to catch us. But this is mistaken. Every situation is “new” in some way, and because the artificial thinkers we have created are untethered from reality and from any connection to “value”, they are hopeless at navigating this novelty. This means that human intelligence and AI intelligence are categorically different. At the very least, our own intelligence contains something that the LLM mind does not capture at all: a feeling for value.

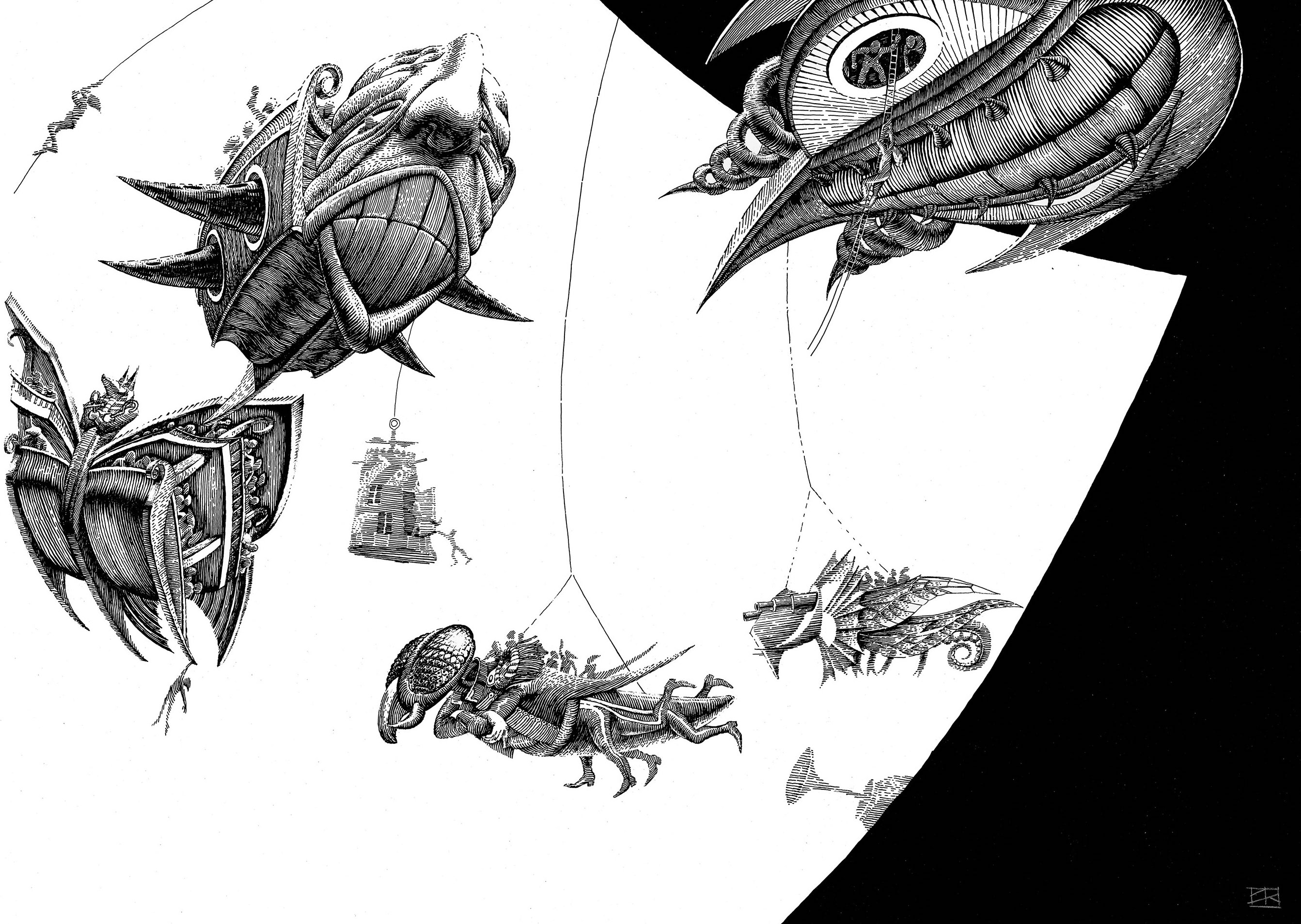

What enables us to recognize value? Our desires guide us in identifying the problems worth solving, and satisfaction is the feeling that tells us when a given problem has been solved. The belly guides the head. LLMs are a head with no belly. Consider yourself as a complex system arising out of the interaction of trillions of individual cells. These cells coordinate for the mutual satisfaction of their desires, much as humans form states for the same purpose. The state controls its people, but the people also guide and constrain the state. The state is nothing without its people. The head guides the body, but the body guides the head. The head is nothing without its body.

The mistake behind the belief that LLMs will become general intelligences (recognizable “selves” like humans) given enough compute or enough training is to take our central nervous system for the only intelligent (value-discerning) part of ourselves. In reality, we are bottom-up systems, value-discerning all the way down to the cellular level. In order to push the frontiers of knowledge, or even to apply existing knowledge to new situations (again, all situations are new in some way), LLMs would need a feeling for value. But value is, of course, a strictly human judgement, which is to say that the question of what is valuable exists only because of our biological reality and our existence in the real universe.

LLMs as they exist in 2025 are already immensely useful. But their use lies in augmenting the human mind, which still has a monopoly on discerning value in any problem space, not just at the theoretical frontier of knowledge. Even the work of a garbage collector, an assembly line worker, or a taxi driver is guaranteed to produce unforeseen circumstances that require a general problem solver. This is not to say that these jobs won’t see significant automation, but it does mean that there will always be a human backstop behind these systems to help AI tools when they get stuck. Our civilization has been automating work for a long time. LLMs are best understood as a continuation of this habit.